Graphs in Machine Learning

Main Topics

Course Description

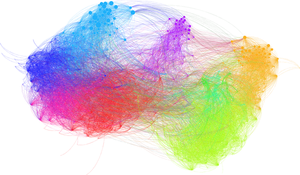

The graphs come handy whenever we deal with relations between the objects. This course, focused on learning, will present methods involving two main sources of graphs in ML: 1) graphs coming from networks, e.g., social, biological, technology, etc. and 2) graphs coming from flat (often vision) data, where a graph serves as a useful nonparametric basis and is an effective data representation for such tasks as spectral clustering, manifold or semi-supervised learning. We will also discuss online decision-making on graphs, suitable for recommender systems or online advertising. Finally, we will always discuss the scalability of all approaches and learn how to address huge graphs in practice.

The lectures will show not only how but mostly why things work. The students will learn relevant topics from spectral graph theory, learning theory, bandit theory, graph neural networks, necessary mathematical concepts and the concrete graph-based approaches for typical machine learning problems. The practical sessions will provide hands-on experience on interesting applications (e.g., online face recognizer) and state-of-the-art graphs processing tools.

Organization

General Syllabus

The following is a comprehensive syllabus combining all topics covered across all years of the course.

L0 Overview

- Short presentation of the course

- Course logistics and expectations

L1 Introduction to Graphs in ML

- Introduction Course overview: natural and constructed graphs. Applications: recommender systems, semi-supervised learning.

- Natural Graphs Natural graphs from social networks: examples, applications, product placement problem, submodularity concepts.

- Graph Theory Basics Graph theory refresher: Seven Bridges of Königsberg (Euler 1735), Eulerian circuits, basic concepts.

- Similarity Graphs Introduction Constructed graphs from similarity networks: flat data to graph abstraction. Applications: vision, audio, text, SSL.

- Similarity Graph Types Three approaches: ε-neighborhood, k-NN, fully connected graphs. Computational bottlenecks, approximate nearest neighbors.

L2 PageRank & Natural Graphs

- Google PageRank: Introduction Google PageRank: ranking web pages by importance. Random surfer model, dangling pages, teleportation solution.

- Google PageRank: Core Algorithm Steady state vector: eigenvector with eigenvalue 1. Perron's theorem guarantees existence and uniqueness.

- Google PageRank: Computation Power method: iterative updates until convergence. Computational tricks for sparse matrices, large-scale implementation.

- Natural Graphs Examples Natural graphs: biological, information, utility networks. Real-world datasets: OGB, SNAP. Constructed graphs from flat data.

- Random Graph Models Random graph models: Erdős–Rényi, Barabási–Albert (preferential attachment), Stochastic Block Model, Watts-Strogatz, Chung-Lu, Fiedler.

- Small-World Phenomena Erdős number project: collaboration graph, six degrees of separation. Small-world phenomenon, network properties.

L3 Graph Laplacian & Spectral Clustering

- Graph Laplacian Basics Graph Laplacian L = D - W: definition, properties, smoothness. Eigenvalue 0 equals connected components.

- Normalized Laplacians Three Laplacian variants: unnormalized, symmetric normalized, random walk. Smoothness formulations, eigenpair relationships.

- Laplacian and Random Walks Random walks: transition probabilities, transition matrix, stationary distribution. Connection to PageRank, harmonic functions.

- Spectral Clustering: Background Graph-based clustering motivation. Background: Kleinberg's axioms, k-means limitations. Clustering paradigms: compactness vs connectivity.

- Spectral Clustering: Graph Cuts Clustering via graph cuts: MinCut, RatioCut, Normalized Cut objectives. NP-hard problems, balanced cuts.

- Spectral Clustering: Relaxation Details Detailed relaxation: discrete cluster assignment → continuous optimization. Graph function representation, eigenvector solution.

- Spectral Clustering: RatioCut & NCut Complete treatment: relaxation from discrete to continuous, Rayleigh-Ritz theorem, RatioCut and Normalized Cut approximations, approximation guarantees, 1D examples.

- Spectral Clustering: Examples & Understanding 1D examples: spectral clustering, eigenvector interpretation, visualizations. Compactness vs connectivity paradigm.

- Spectral Clustering: Additional Examples Additional examples, visualizations. Elbow rule/EigenGap heuristic for choosing clusters. Approximation guarantees and limitations.

L4 Manifold Learning & Similarity Construction

- Manifold Learning Dimensionality reduction: low-dimensional embeddings preserving local structure. Laplacian Eigenmaps algorithm.

- Similarity Graphs Construction Building graphs from vectorial data: fully connected, ε-neighborhood, k-NN graphs. Computational bottlenecks, scalability.

- Movie Recommendations Movie recommendation on bipartite graphs: compute scores using graph distances. Effective resistance solution.

- Resistive Networks Electrical network interpretation: edges as conductors, Ohm's law. Effective resistance properties: metric.

- Effective Resistance Computation Kirchhoff's Law: flow in = flow out. Laplacian connection, harmonic functions, Moore-Penrose pseudo-inverse computation.

L5 SSL Foundations

- SSL Introduction SSL: few labeled, many unlabeled examples. Cluster, smoothness, manifold assumptions. MinCut SSL relaxation.

- SSL Harmonic Functions Harmonic function solution: unique smooth solution via graph Laplacian. Random walk interpretation, iterative propagation.

- SSL Regularization Regularized harmonic functions: sink node, soft solution, stability bounds. Out-of-sample extension, manifold regularization.

- SSL Manifold Regularization Manifold regularization: empirical risk, RKHS norm, graph smoothness. LapRLS, LapSVM, inductive learning.

L6 SSL Advanced & Graph Sparsification

- Inductive Bounds Inductive generalization bounds: transductive and inductive errors. VC dimension analysis, MMGC algorithm, theoretical guarantees.

- Transductive Bounds Transductive generalization bounds: stability analysis, β-stability. Error rate O(n_l^(-1/2)) achievable.

- LapSVMs & Max-Margin Laplacian SVMs: max-margin learning with graph regularization. Max-Margin Graph Cuts algorithm.

- SSL Learnability When does graph-based SSL provably help? Minimax analysis, VC dimension, conditions for gap.

- Cut Graph Sparsifiers Cut sparsifiers (Benczúr-Karger): preserve all cuts within (1±ε). Importance sampling algorithm, O(n log n/ε²) edges.

- Spectral Graph Sparsifiers: Theory Spectral sparsifiers (Spielman-Teng): preserve Laplacian quadratic form. Effective resistance, randomized algorithm, Matrix Chernoff bounds.

- Spectral Graph Sparsifiers in ML Spectral sparsifiers in ML: Laplacian smoothing, denoising. Computational improvements: O(m) → O(n log n) space.

L7 Online SSL & Large-Scale Processing

- Online SSL Introduction Online vs offline learning: incremental unlabeled examples. Computational challenge: cost grows with graph size.

- Online SSL: Graph Quantization Graph quantization: keep k centroids to limit memory. Charikar's incremental k-centers algorithm, compact representation.

- Online SSL Applications Applications: online face recognition, video analysis. Experimental results, comparison with offline methods.

- Online SSL Analysis Three error sources: generalization, online, quantization. Quantization error analysis, regret O(n_l^(-1/2)) achievable.

- Large-Scale Introduction Computational bottlenecks: space O(m) to O(n²), time O(n²) construction. Real-world scale: billions of pages.

- Distributed Processing Introduction Distributed graph processing: scalability and consistency challenges. Computation models: MapReduce, MPI, Pregel, GraphLab.

- GraphLab Abstraction GraphLab abstraction: immutable graphs, asynchronous computation, functional programming. Applications: large-scale label propagation.

L8 Advanced Topics

- Submodularity: Theory Submodular functions: diminishing returns property. Greedy algorithm with (1-1/e) approximation guarantee.

- Submodularity: Applications Product placement on social networks: flip coins for "live" edges. Greedy outperforms centrality measures.

- Graph Bandits Graph bandits introduction, observability graphs, side observations, and online non-stochastic rewards (Exp3).

- Spectral Bandits Spectral bandits: Laplacian eigenvectors reduce dimension from N to d. SpectralUCB algorithm, regret bounds.

- Influence Maximization Influence maximization as revealing graph bandit. BARE algorithm achieves better regret bounds.

TD1Spectral Clustering

- 📄 Handout (PDF) Graph construction and spectral clustering practice

- 💻 Python Code: build_similarity_graph.py, spectral_clustering.py, image_segmentation.py

- 📊 Data: four_elements.bmp, fruit_salad.bmp

TD2Face Recognition with SSL

- 📄 Handout (PDF) Semi-supervised learning and harmonic function solution

- 💻 Python Code: harmonic_function_solution.py, face_recognition_hfs.py, online_ssl.py

- 📊 Data: 10 faces, 2-moons, 2-moons (large)

TD3Graph Neural Networks

- 📄 Handout (PDF) Neural Relational Inference for Interacting Systems

- 💻 Code Repository: NRI Practical Session on GitHub

- 📖 Topics: Edge type estimation, trajectory prediction, encoder-decoder architecture, LSTM baseline comparison