Current Research

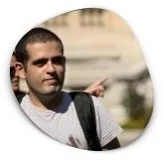

RLHF and LLM Alignment Active

We develop methods for aligning large language models (LLMs) with human preferences. Our main contribution is Nash Learning from Human Feedback (NLHF), an alternative to RLHF that learns a preference model and finds the Nash equilibrium policy. We also develop online preference optimization, offline alignment methods (GPO), decoding-time realignment, and theoretical frameworks for understanding preference learning. Our work has been applied to Llama 3 and addresses challenges in reward modeling, multi-sample comparisons, and metacognitive capabilities of LLMs.

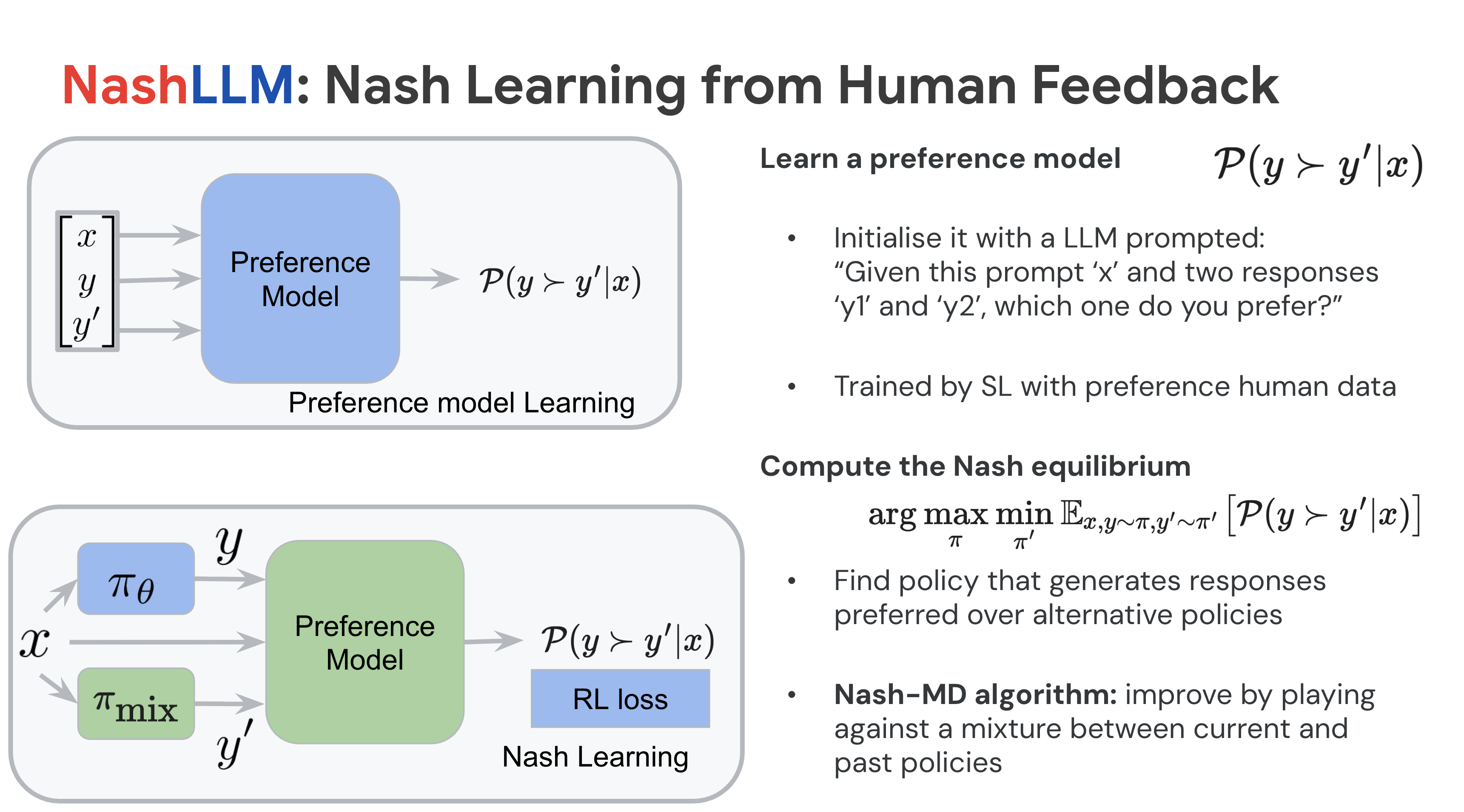

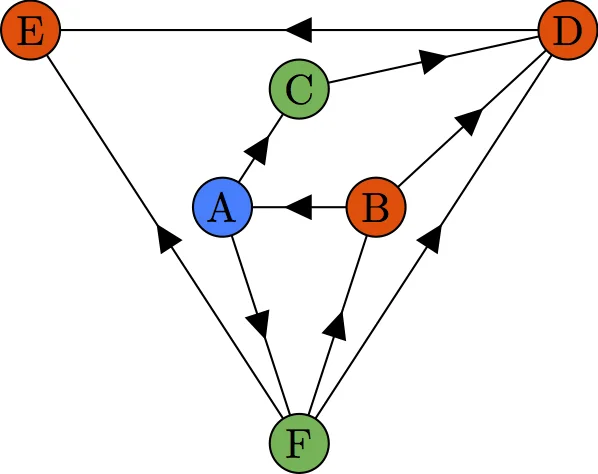

Zero-Sum Games and Imperfect Information Active

We develop algorithms for learning optimal strategies in zero-sum games with imperfect information. Our contributions include model-free learning for partially observable Markov games, adaptive algorithms for extensive-form games with local and adaptive mirror descent, and last-iterate convergence guarantees for uncoupled learning. Our work on adapting to game trees in zero-sum imperfect information games received the outstanding paper award at ICML 2023. We also study multiagent evaluation under incomplete information.

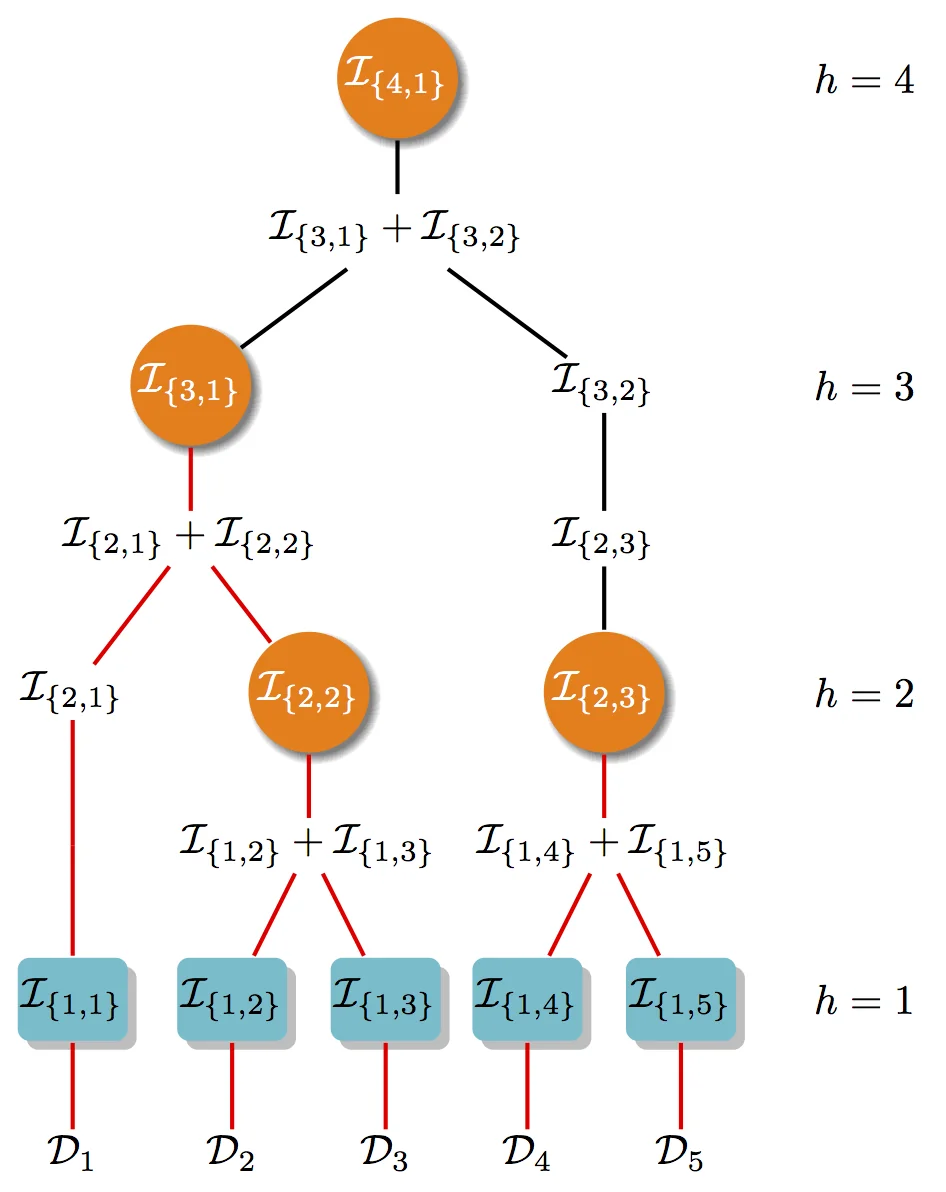

Sample efficient Monte-Carlo tree search Active

2011 – present

Monte-Carlo planning and Monte-Carlo tree search have been popularized by computer Go. Our first contribution is a family of generic black-box function optimizers for extremely difficult functions, with guarantees, whose main application is hyper-parameter tuning. The second set of contributions is in planning, including TrailBlazer, an adaptive planning algorithm for MDPs. We developed parameter-free and adaptive approaches to optimization, scale-free online planning, and adaptive MCTS methods.

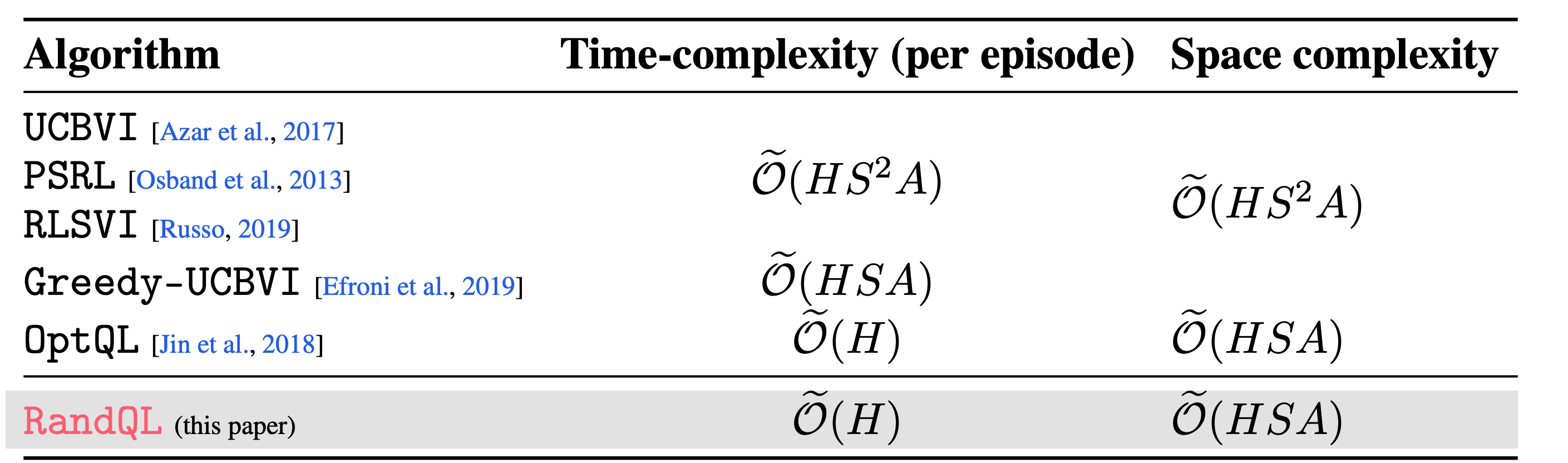

Posterior Sampling and Bayesian RL Completed

We develop posterior sampling methods for reinforcement learning that achieve optimism without explicit bonus terms. Our contributions include optimistic posterior sampling with tight guarantees, model-free posterior sampling via learning rate randomization, fast rates for maximum entropy exploration, and demonstration-regularized RL. We also provide sharp deviation bounds for Dirichlet weighted sums and new bounds on cumulant generating functions of Dirichlet processes, which are fundamental for analyzing Bayesian algorithms.

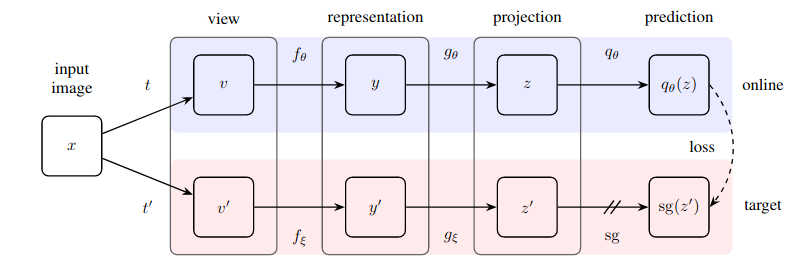

Self-Supervised Learning and BYOL Completed

We develop self-supervised learning methods that learn representations without labeled data. Our flagship contribution is Bootstrap Your Own Latent (BYOL), a self-supervised approach that avoids contrastive learning (it needs no negative pairs) by training an online network to predict the representations produced by a target network, which is itself a slowly updated copy of the online network. BYOL achieves state-of-the-art results in image representation learning and has been extended to exploration in reinforcement learning (BYOL-Explore), video learning, graph representation learning, and neuroscience applications.
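The slowly updated target network can be illustrated with an exponential moving average of the online parameters. The following is only a minimal sketch: parameters are reduced to a flat list of floats, and the names `ema_update` and `tau` are chosen for illustration rather than taken from any BYOL codebase.

```python
def ema_update(target, online, tau=0.99):
    """Move each target parameter a small step toward its online counterpart.

    tau close to 1 means the target changes slowly (hypothetical default).
    """
    return [tau * t + (1.0 - tau) * o for t, o in zip(target, online)]


# Toy parameters: the online network is held fixed here so the drift of the
# target toward it is easy to see. In training, the online parameters would
# change at every gradient step.
online = [1.0, -2.0]
target = [0.0, 0.0]

for _ in range(3):
    target = ema_update(target, online, tau=0.5)

print(target)
```

With `tau=0.5` the target covers half of the remaining distance to the online parameters at each step; values much closer to 1 give a far more stable target.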

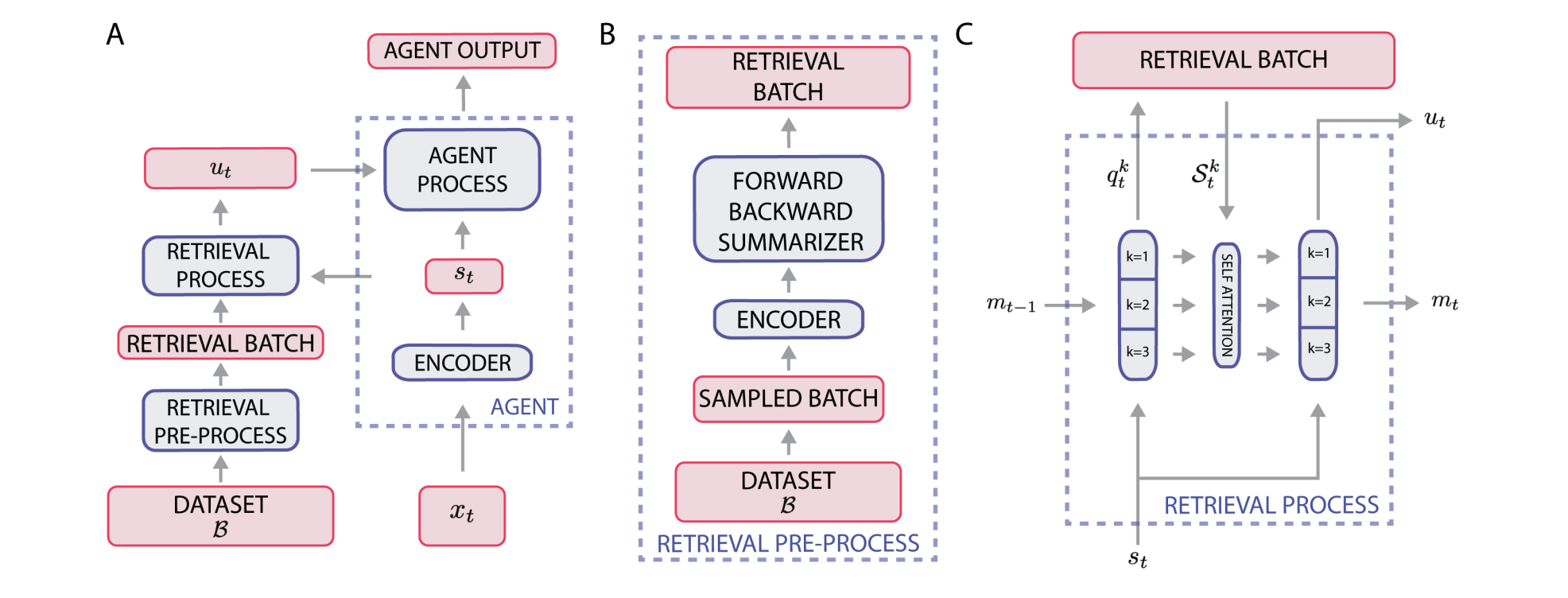

Exploration and Intrinsic Motivation Completed

We develop methods for exploration in reinforcement learning using intrinsic motivation and learned representations. Our contributions include unlocking the power of representations in long-term novelty-based exploration, curiosity in hindsight for stochastic environments, density-based bonuses on learned representations for reward-free exploration, geometric entropic exploration, quantile credit assignment, and retrieval-augmented reinforcement learning. These methods enable effective exploration in sparse-reward or reward-free environments.

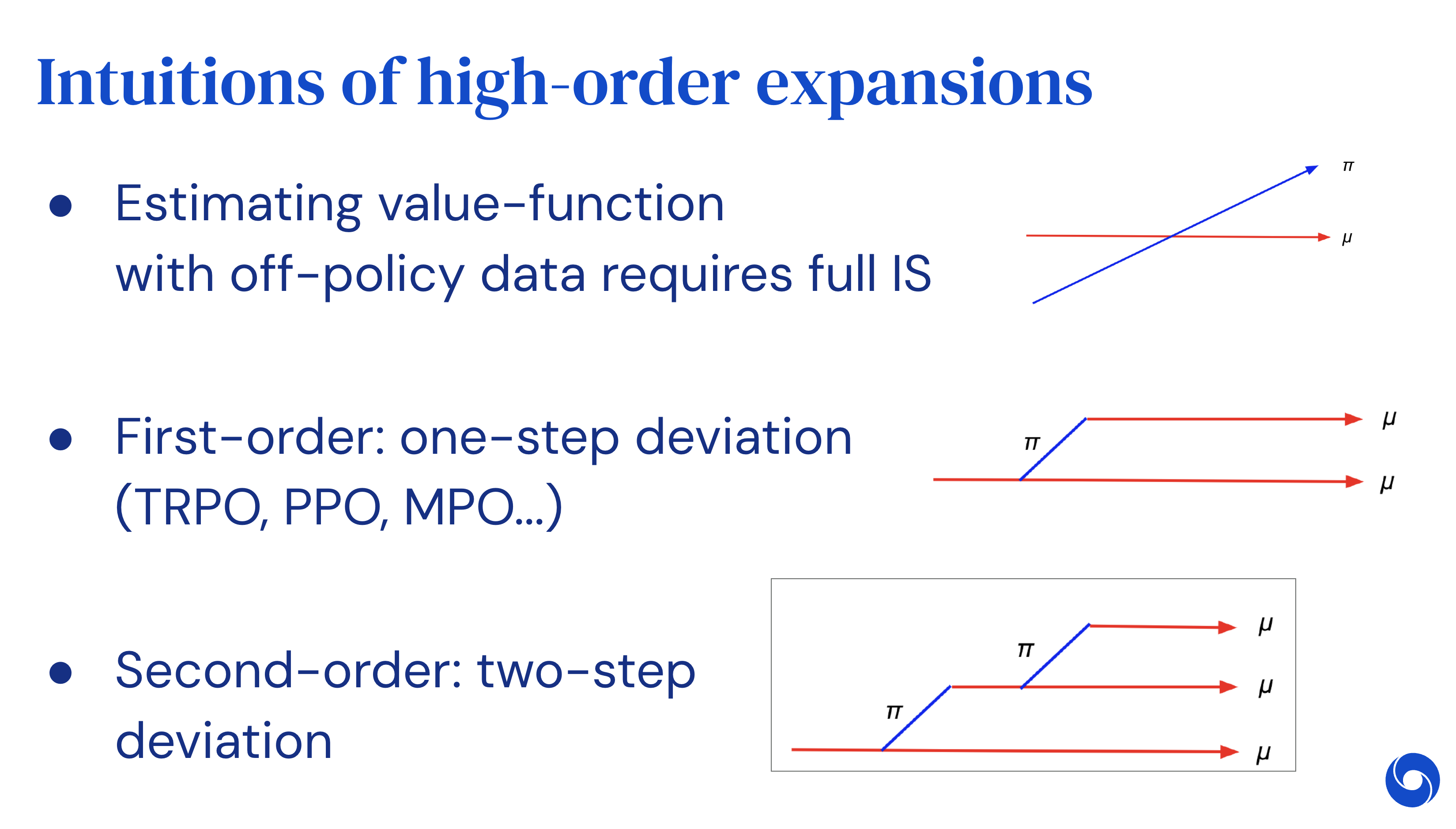

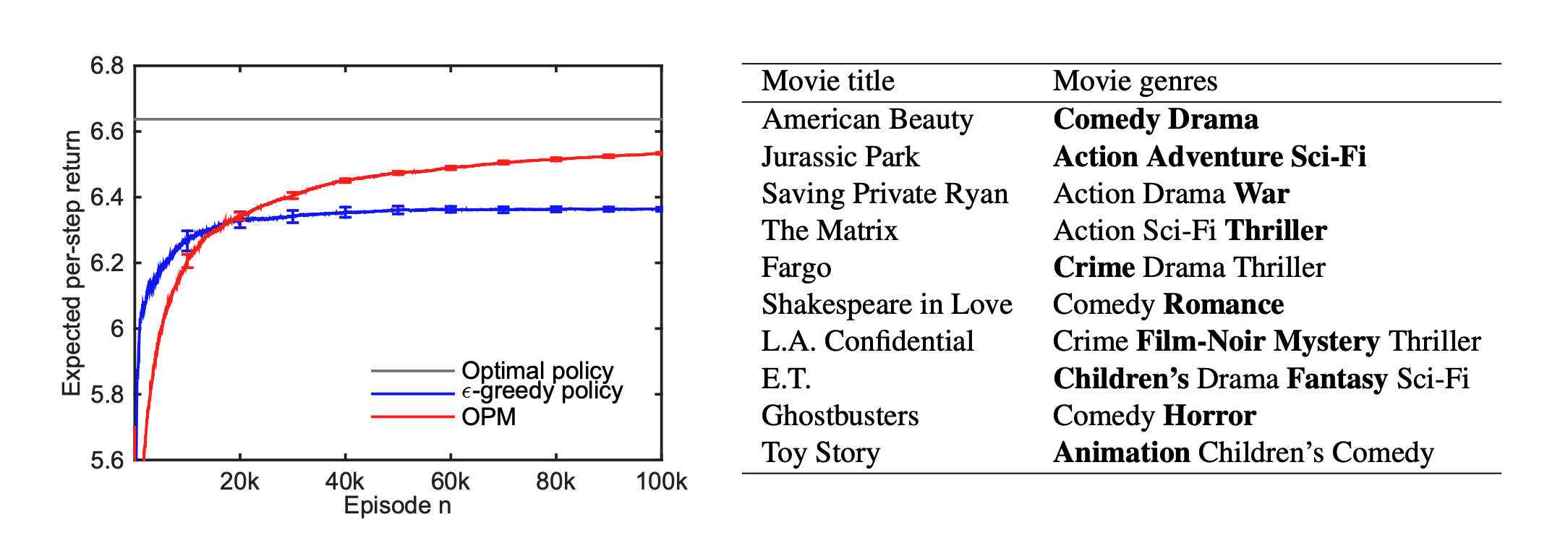

Off-Policy and Value Function Learning Completed

We develop methods for off-policy reinforcement learning and value function estimation. Our contributions include UCB Momentum Q-learning that corrects bias without forgetting, unified gradient estimators for meta-RL via off-policy evaluation, marginalized operators for off-policy RL, VA-learning as an alternative to Q-learning, Taylor expansion of discount factors and policy optimization, and revisiting Peng's Q(λ) for modern RL. These methods improve sample efficiency and stability in off-policy settings.

Stochastic Shortest Path and Reward-Free Exploration Completed

We study exploration in reinforcement learning when no reward function is provided. We develop algorithms for stochastic shortest path problems with minimax optimal regret bounds that are parameter-free and approach horizon-free. Our work includes provably efficient sample collection strategies, incremental autonomous exploration, goal-oriented exploration, and adaptive multi-goal exploration. These methods enable agents to efficiently explore unknown environments before any task is specified.

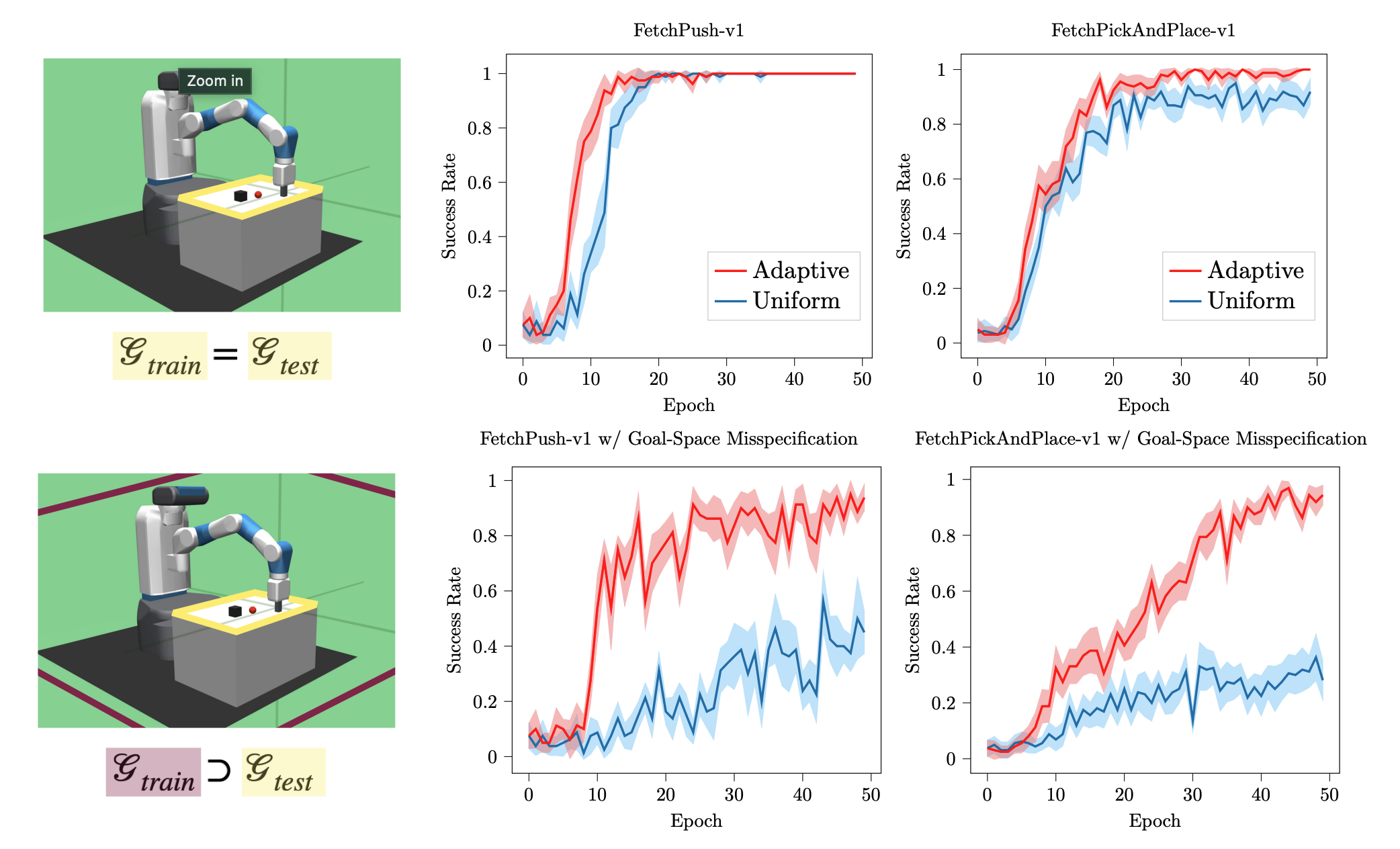

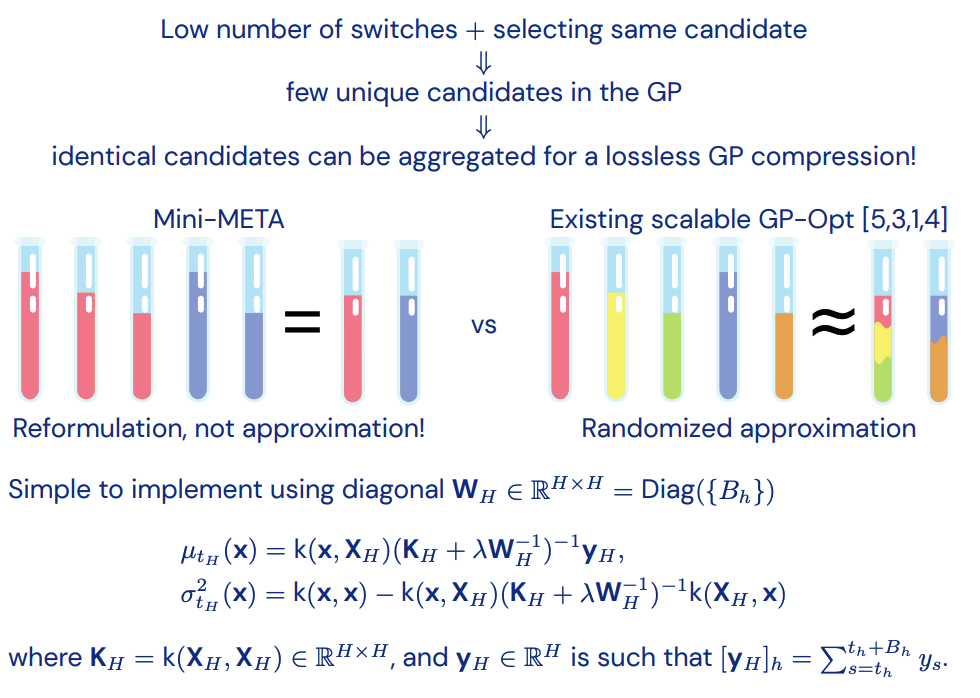

Gaussian Process Optimization and Kernel Methods Completed

We develop scalable methods for Gaussian process optimization and kernel-based reinforcement learning. Our contributions include adaptive sketching techniques that achieve near-linear time complexity, methods for evaluating candidates multiple times to reduce switch costs, and kernel-based approaches for non-stationary reinforcement learning. We also provide finite-time analysis of kernel-based RL and study episodic RL with minimax lower bounds in metric spaces.

Determinantal Point Processes (DPPs) Completed

Determinantal point processes (DPPs) are probabilistic models for selecting diverse subsets of items. We develop efficient sampling algorithms for DPPs, including exact sampling with sublinear preprocessing, zonotope hit-and-run methods for projection DPPs, and applications to Monte Carlo integration. We also create DPPy, a Python toolbox for DPP sampling, and study fast sampling from β-ensembles. Our methods enable scalable diverse subset selection in machine learning applications.
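To make the "diverse subsets" property concrete, here is a small sketch of the L-ensemble form of a DPP, in which a subset S has probability det(L_S) / det(L + I). It uses plain Python rather than DPPy, and the kernel `L` and the helper names `det` and `subset_probability` are illustrative assumptions.

```python
def det(m):
    """Determinant by Laplace expansion; fine for the tiny matrices used here."""
    if len(m) == 0:
        return 1.0  # determinant of the empty matrix, used for the empty subset
    if len(m) == 1:
        return m[0][0]
    total = 0.0
    for j, a in enumerate(m[0]):
        minor = [row[:j] + row[j + 1:] for row in m[1:]]
        total += (-1) ** j * a * det(minor)
    return total


def subset_probability(L, subset):
    """P(S) = det(L_S) / det(L + I) for an L-ensemble DPP."""
    n = len(L)
    sub = [[L[i][j] for j in subset] for i in subset]
    L_plus_I = [[L[i][j] + (1.0 if i == j else 0.0) for j in range(n)]
                for i in range(n)]
    return det(sub) / det(L_plus_I)


# Hypothetical similarity kernel: items 0 and 1 are nearly identical,
# item 2 is unrelated to both.
L = [
    [1.0, 0.9, 0.0],
    [0.9, 1.0, 0.0],
    [0.0, 0.0, 1.0],
]

p_similar = subset_probability(L, [0, 1])  # two near-duplicates
p_diverse = subset_probability(L, [0, 2])  # two unrelated items
print(p_similar, p_diverse)
```

The pair of near-duplicate items receives a much smaller probability than the pair of unrelated items, which is the repulsion effect that makes DPPs useful for diverse selection. Enumerating determinants like this scales poorly; it is meant only to show the definition, not an efficient sampler.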

Combinatorial Bandits and Influence Maximization Completed

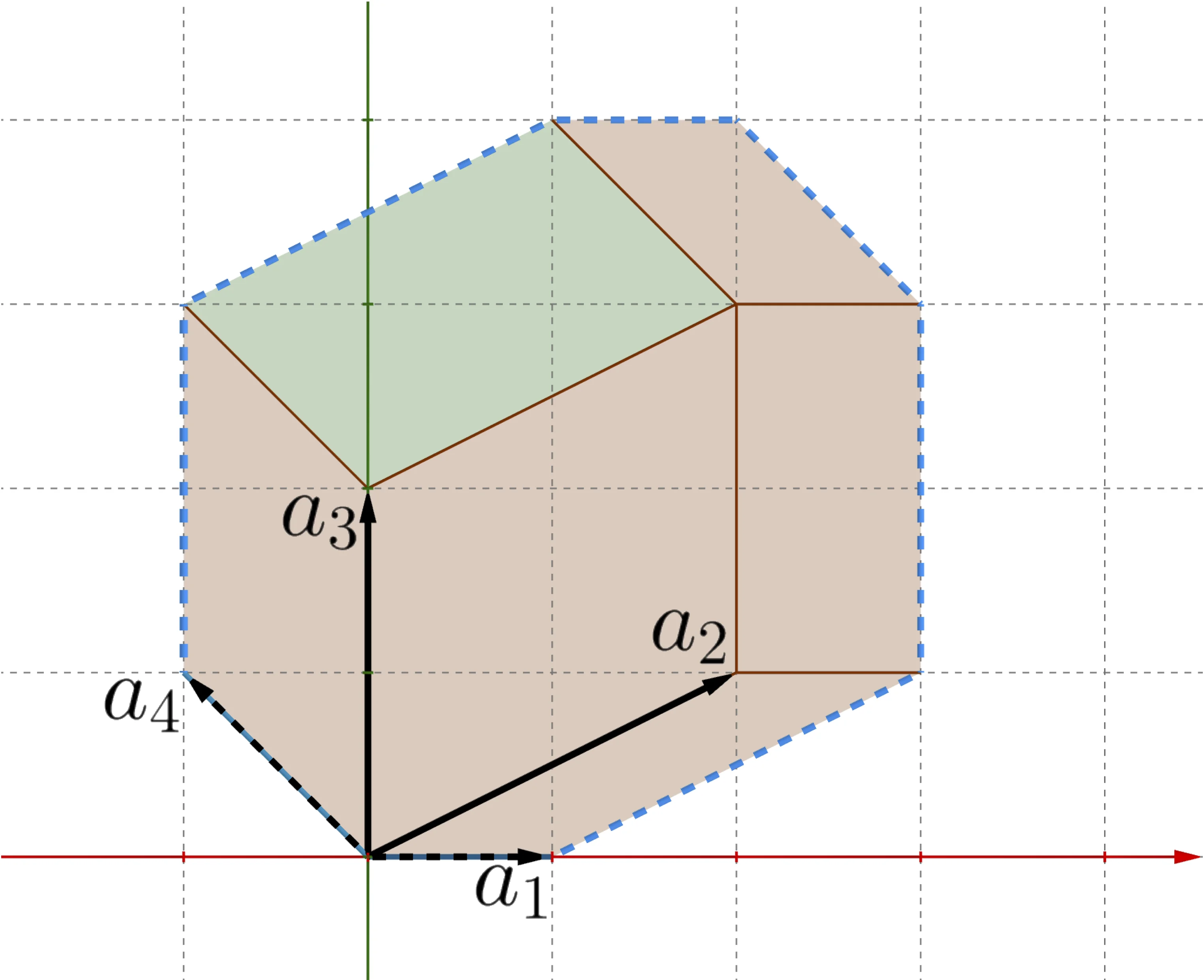

We study online learning in combinatorial settings, particularly influence maximization in social networks. Our work includes online influence maximization under the independent cascade model with semi-bandit feedback, budgeted online influence maximization, efficient algorithms for matroid semi-bandits that exploit structure of uncertainty, statistical efficiency of Thompson sampling for combinatorial semi-bandits, and covariance-adapting algorithms for semi-bandits. We also develop methods for learning to act greedily in polymatroid settings.

Past Projects

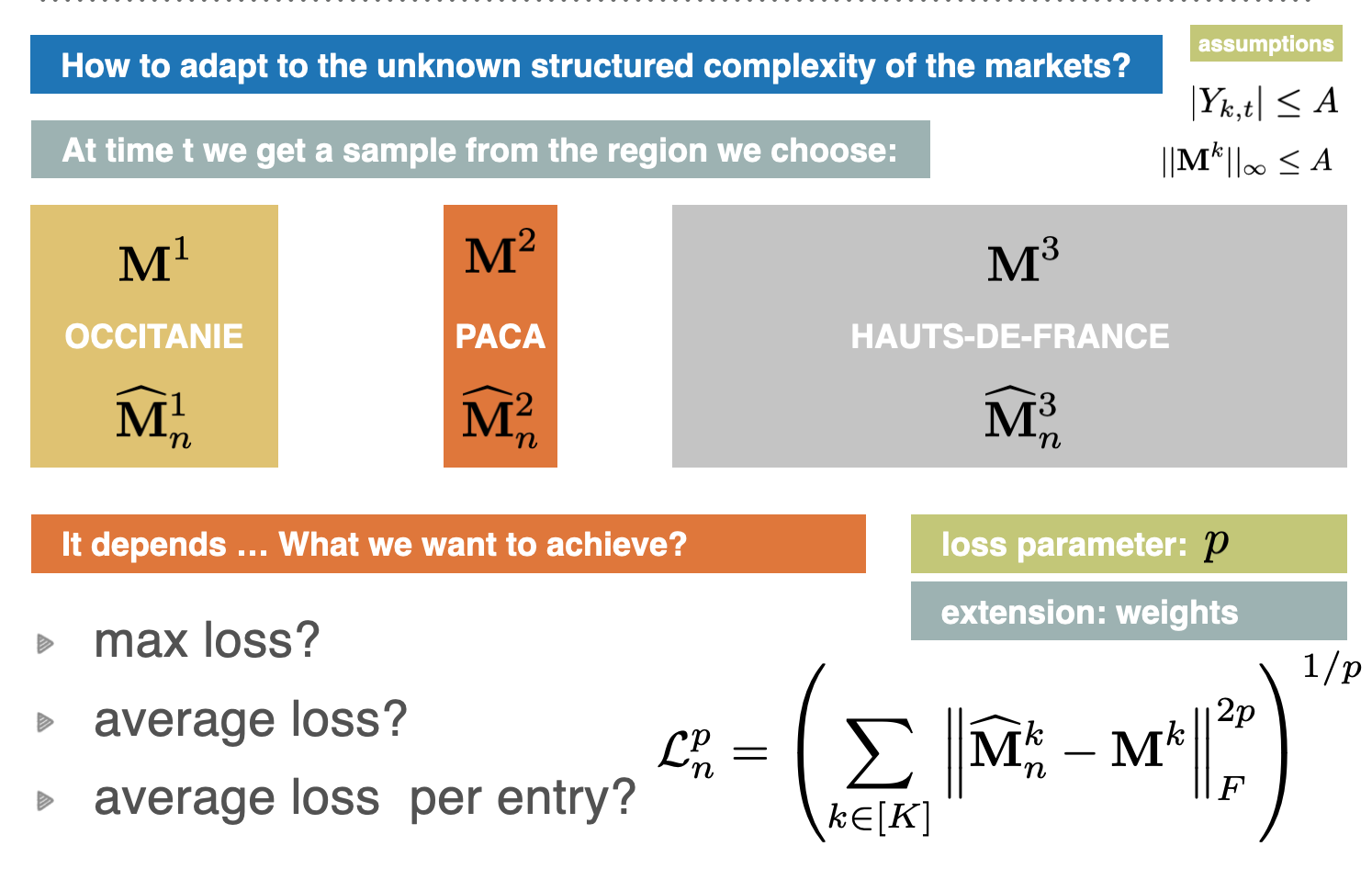

Adaptive Structural Sampling Completed

Many sequential problems require adaptive sampling of a particular kind. Examples include using learning to improve the rejection rate in rejection sampling, sampling with two contradictory objectives (such as when we have to trade off reward and regret), extreme and infinitely many-armed bandits, and efficient sampling of determinantal point processes.

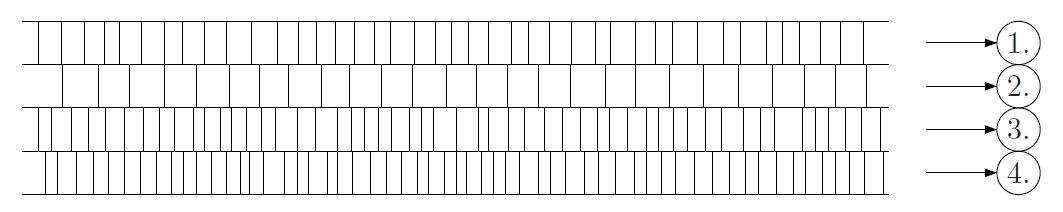

SQUEAK: Online Sparsification of Kernels and Graphs Completed

My PhD thesis ended with an open direction: whether efficient spectral sparsifiers can fuel online graph-learning methods. We introduce the first dictionary-learning streaming algorithm that operates in a single pass over the dataset. This reduces the overall time required to construct provably accurate dictionaries from quadratic to near-linear, or even logarithmic when parallelized.

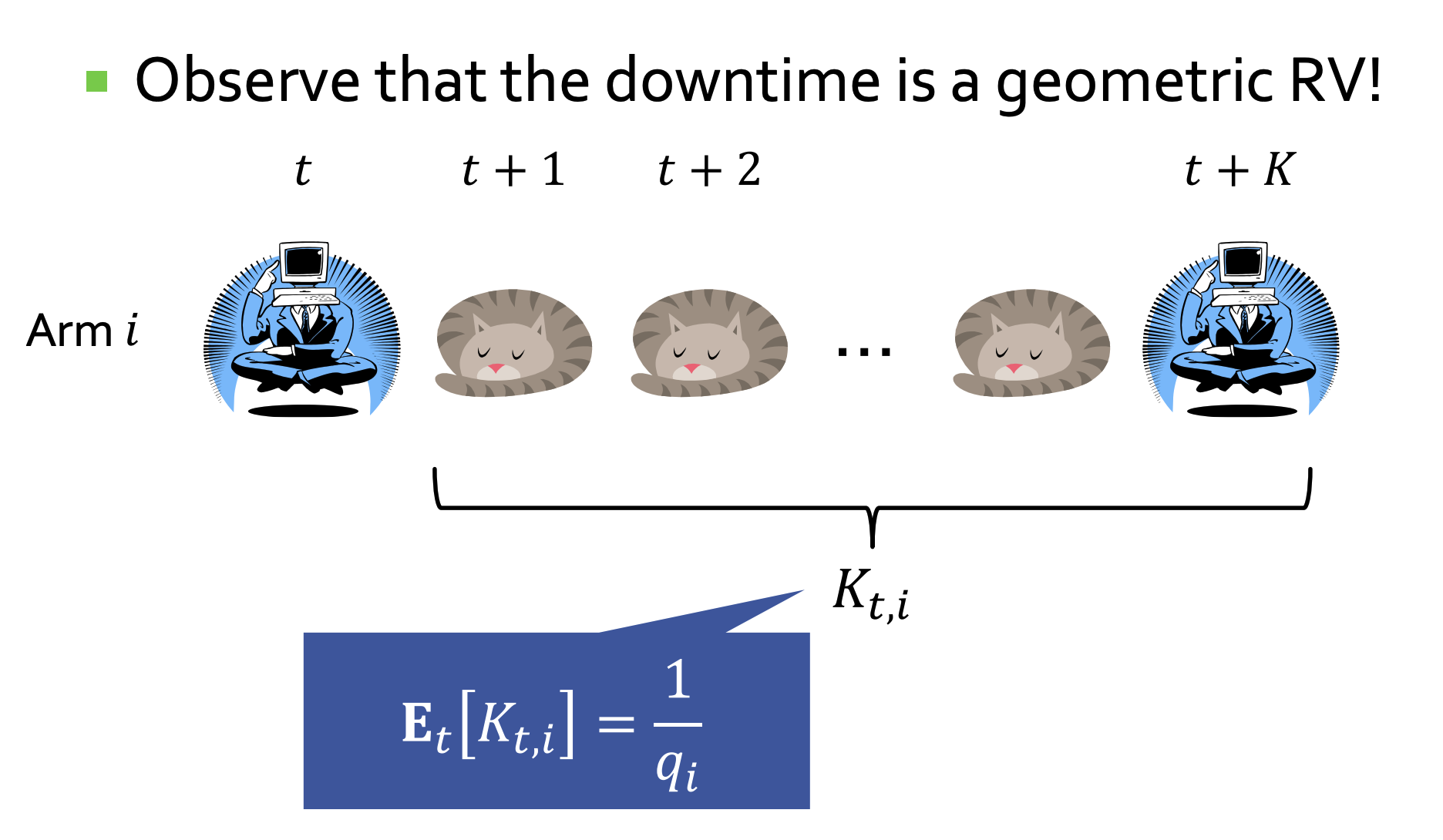

Graph Bandits Completed

Bandit problems are online decision-making problems where the only feedback given to the learner is a (noisy) reward of the chosen decision. We study the benefits of homophily (similar actions give similar rewards) under the name spectral bandits, side information (well-informed bandits), and influence maximization (IM bandits). In the algorithms, we take advantage of these similarities in order to (provably) learn faster.

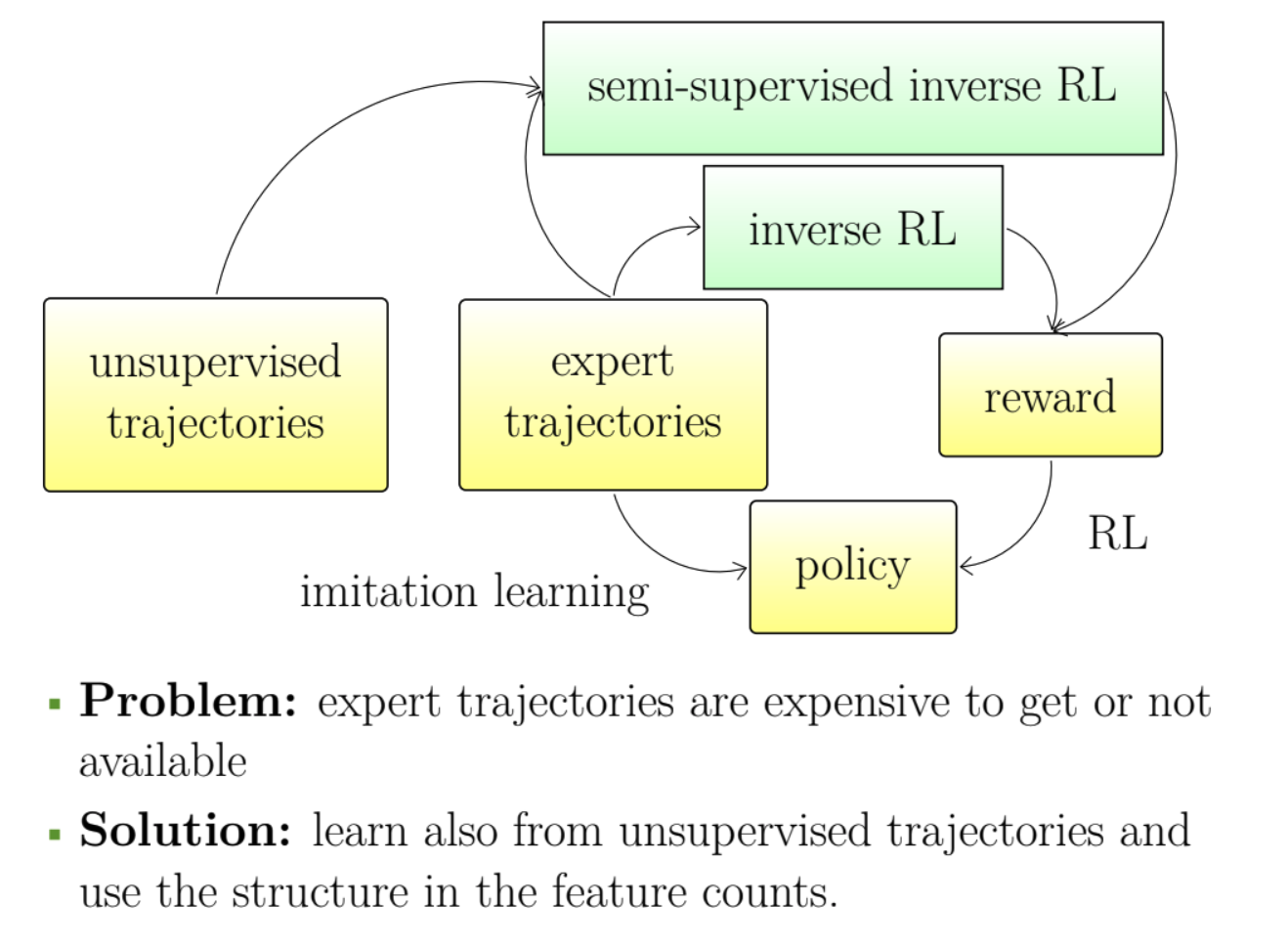

Semi-Supervised Apprenticeship Learning Completed

In apprenticeship learning, we aim to learn good behavior by observing an expert. We consider the situation in which we observe many trajectories of behavior but only one or a few of them are labeled as expert trajectories. We investigate the assumptions under which the remaining unlabeled trajectories can help in learning a policy with good performance.

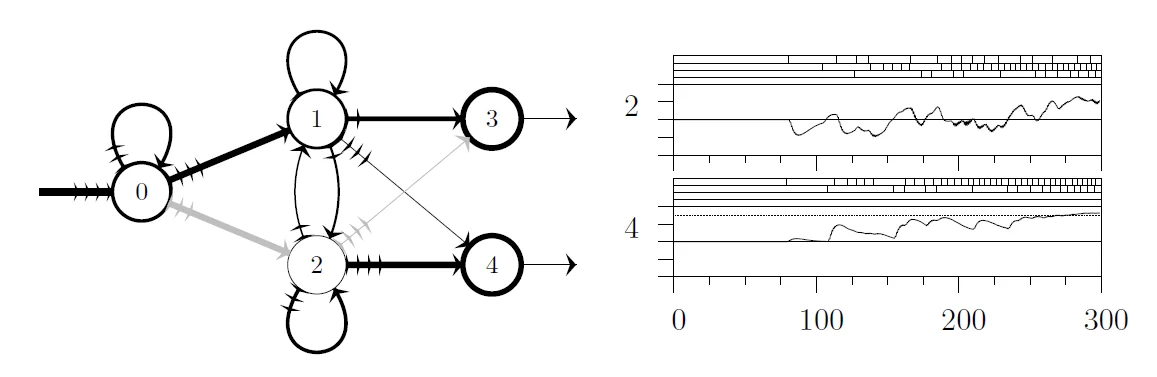

CompLACS: Composing Learning for Artificial Cognitive Systems Completed

The purpose of this project was to develop a unified toolkit for intelligent control in many different problem areas. This toolkit incorporates many of the most successful approaches to a variety of important control problems within a single framework, including bandit problems, Markov Decision Processes (MDPs), Partially Observable MDPs (POMDPs), continuous stochastic control, and multi-agent systems.

Large-Scale Semi-Supervised Learning Completed

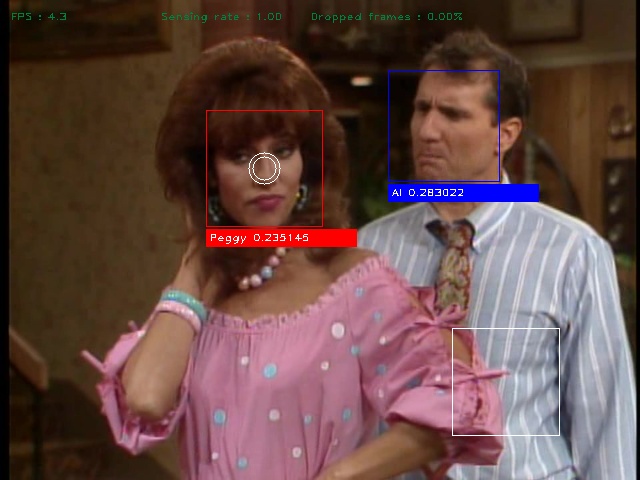

We parallelized an online harmonic solver to process 1 TB of video data in a day. I worked on multi-manifold learning that can overcome changes in distribution, and showed how the online learner adapts to the characters' aging over a 10-year period in the sitcom Married... with Children. The research was part of the Everyday Sensing and Perception (ESP) project.

Online Semi-Supervised Learning Completed

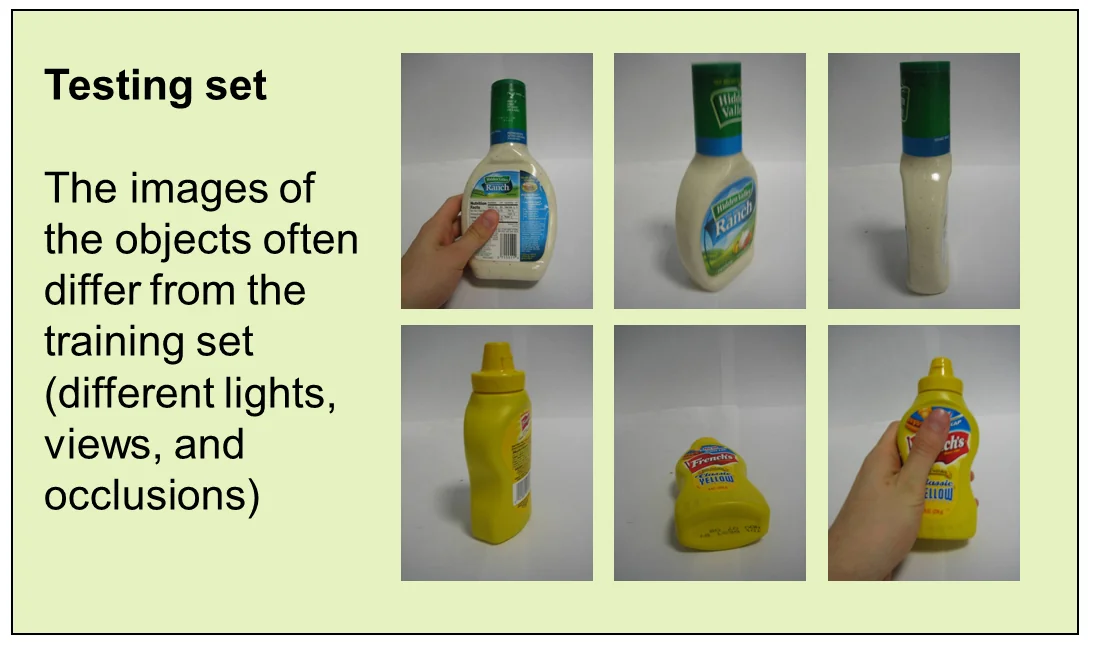

We extended graph-based semi-supervised learning to the structured case and demonstrated it on handwriting recognition and object detection from video streams. We came up with an online algorithm that, on real-world datasets, recognizes faces with 80-90% precision and 90% recall.

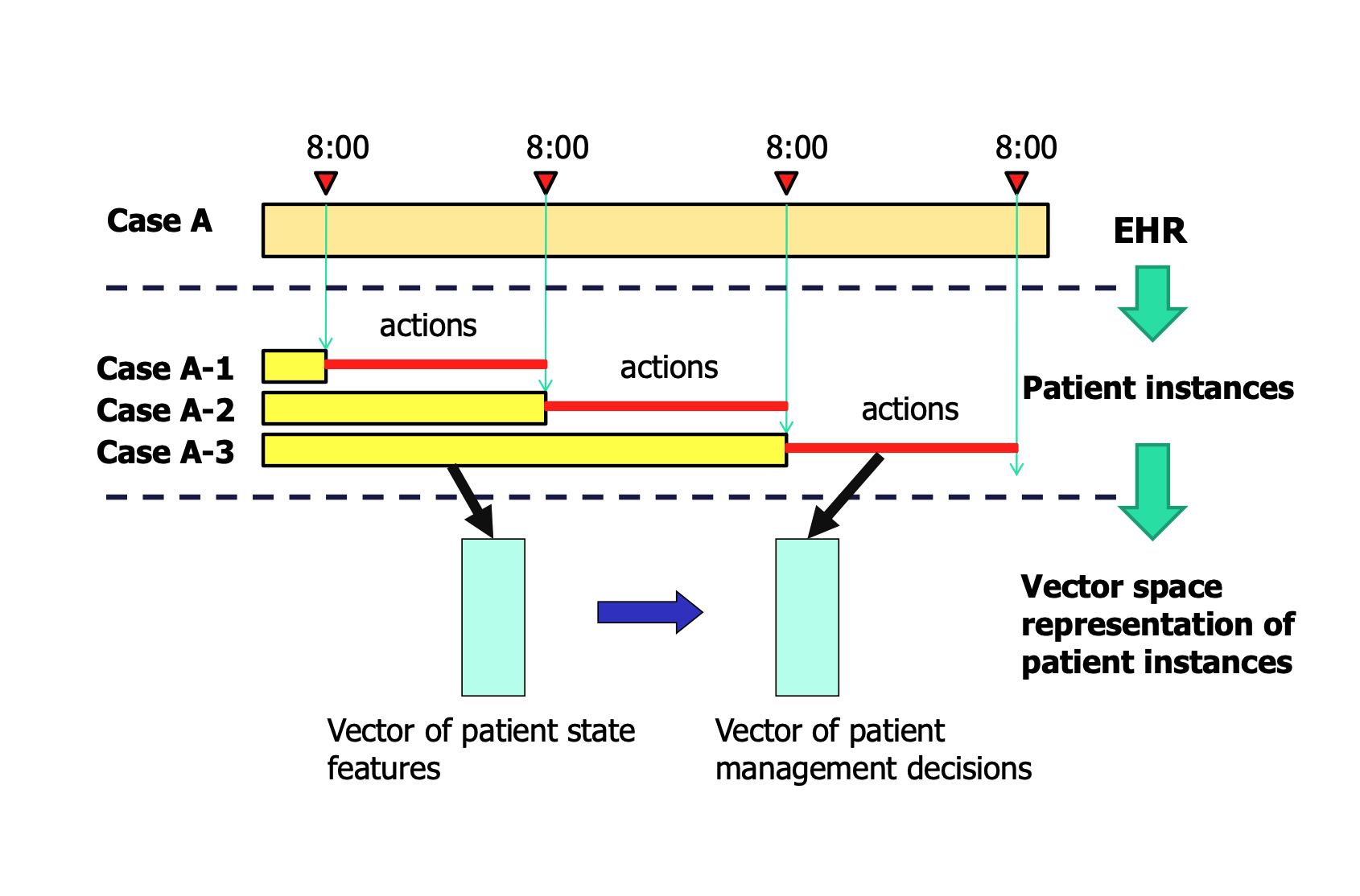

Anomaly Detection in Clinical Databases Completed

We developed statistical anomaly detection methods for identifying unusual outcomes and patient management decisions. I combined max-margin learning with a learned distance to create an anomaly detector that outperforms the hospital rule for heparin-induced thrombocytopenia detection. I later scaled the system to 5K patients with 9K features and 743 clinical decisions per day.

Odd-Man-Out Completed

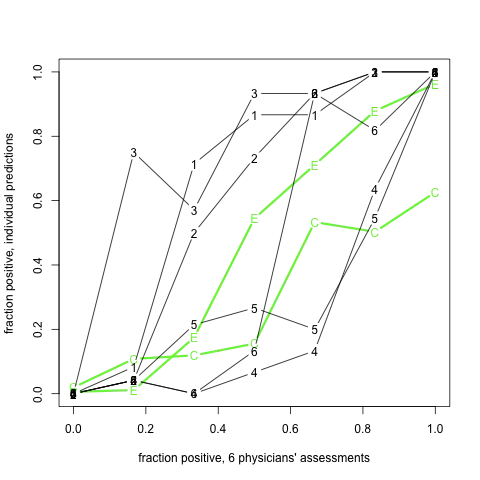

We hypothesized that clinical data in emergency department (ED) reports would increase the sensitivity and specificity of case identification for patients with an acute lower respiratory syndrome (ALRS). We designed a statistic of disagreement (odd-man-out) to evaluate the machine learning classifier against expert evaluation in cases where no gold standard is available.

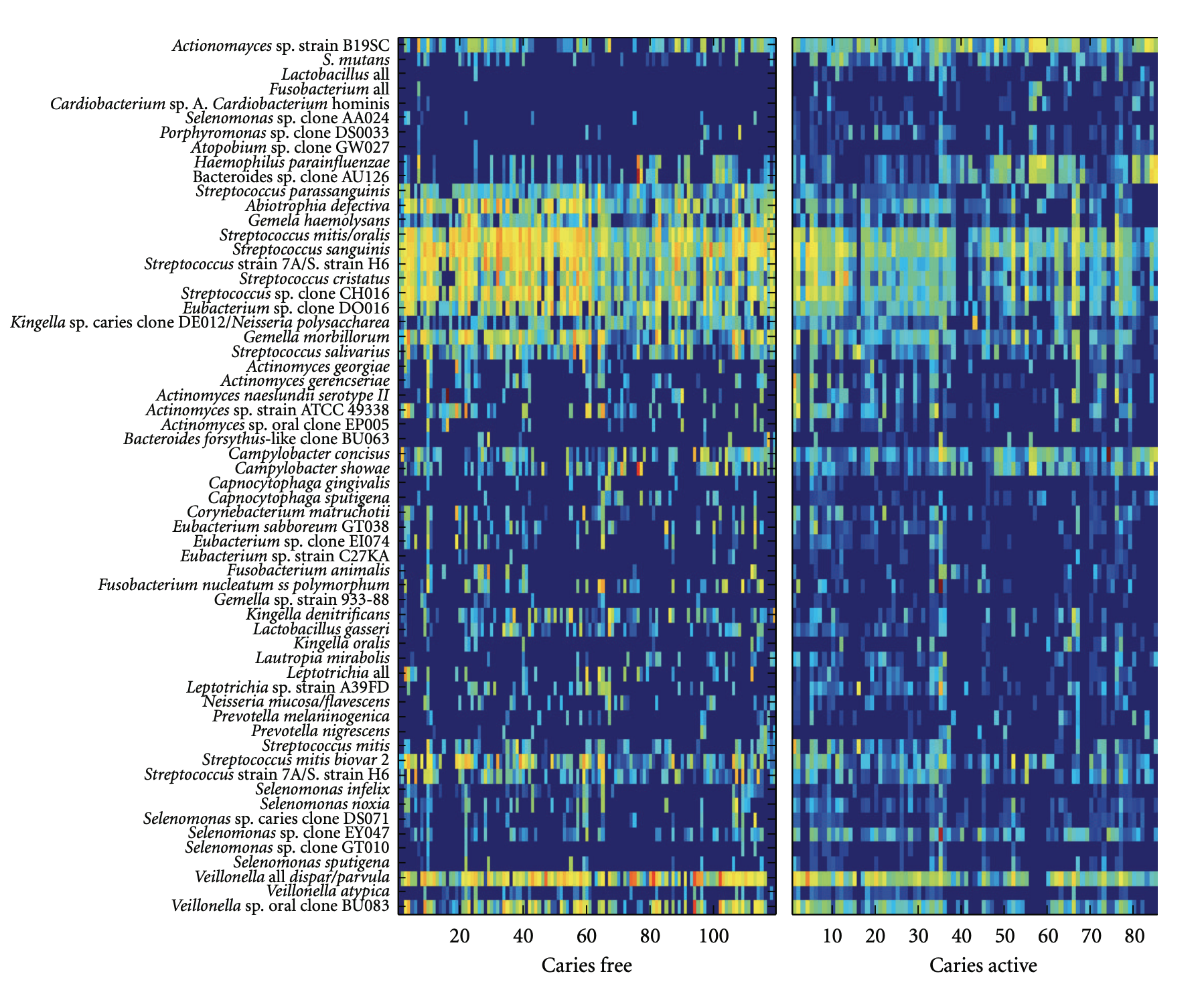

High-Throughput Proteomic and Genomic Biomarker Discovery Completed

I built a framework for cancer prediction from high-throughput proteomic and genomic data sources. I found a way to merge heterogeneous data sources: my fusion model was able to predict pancreatic cancer from Luminex combined with SELDI with 91.2% accuracy.

Archive

Evolutionary Feature Selection Algorithms Completed

We enhanced the existing FeaSANNT neural feature selection method with a spiking neuron model so that it can handle inputs corrupted by up to 10% Gaussian noise.

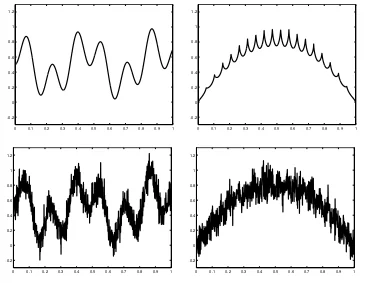

Plastic Synapses (Regularity Counting) Completed

We modeled a basic learning function at the level of synapses. I designed a model that is able to adapt to regular frequencies at different rates as time passes. I used genetic programming to find biologically plausible networks that distinguish between different gamma distributions and provided an explanation of the evolved strategies.

Algebraic Structures

Implementation of algebraic structures hierarchy (groups, rings, fields) in Smalltalk. Features modular arithmetic didactic tools, RSA encryption demonstration, and primality testing. A university course project exploring OOP design patterns.
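The modular arithmetic behind an RSA demonstration of this kind can be sketched in a few lines. The original project is in Smalltalk; the Python below is only an illustrative stand-in with textbook-sized primes, and the names `is_prime`, `message`, `cipher`, and `recovered` are assumptions, not taken from the project.

```python
def is_prime(n):
    """Trial division; adequate for the small demonstration primes below."""
    if n < 2:
        return False
    d = 2
    while d * d <= n:
        if n % d == 0:
            return False
        d += 1
    return True


# Toy key generation with tiny primes (far too small to be secure).
p, q = 61, 53
assert is_prime(p) and is_prime(q)

n = p * q                  # public modulus: 3233
phi = (p - 1) * (q - 1)    # Euler's totient of n: 3120
e = 17                     # public exponent, coprime to phi
d = pow(e, -1, phi)        # private exponent: inverse of e mod phi (Python 3.8+)

message = 65
cipher = pow(message, e, n)     # encryption: message^e mod n
recovered = pow(cipher, d, n)   # decryption: cipher^d mod n

print(cipher, recovered)
```

Decryption returns the original message because `e * d` is congruent to 1 modulo `phi`, so raising to the power `e` and then `d` is the identity on residues modulo `n`.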

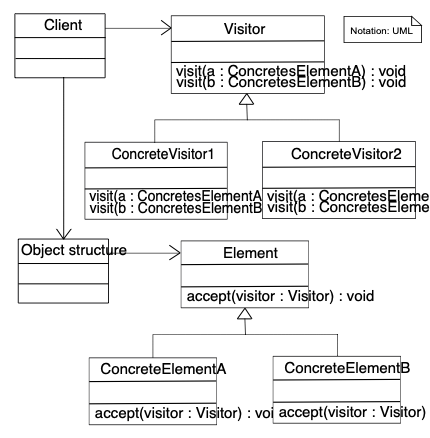

Double Dispatching

An explanation of the Double Dispatching design pattern in Smalltalk. This OOP technique elegantly solves polymorphism with parameters by reducing uncertainty step-by-step through secondary method dispatch, replacing conditional constructs with robust polymorphic code.
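A minimal sketch of the pattern, written in Python rather than Smalltalk and using hypothetical `Integer` and `Ratio` classes: the first dispatch selects `add` on the receiver's class, and the second dispatch calls a type-specific method on the argument, so neither class needs an `isinstance` check or conditional.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Integer:
    value: int

    def add(self, other):
        # First dispatch picked Integer.add; the second dispatch tells
        # `other` that the left operand is an Integer.
        return other.add_integer(self)

    def add_integer(self, left):
        return Integer(left.value + self.value)

    def add_ratio(self, left):
        return Ratio(left.num + self.value * left.den, left.den)


@dataclass(frozen=True)
class Ratio:
    num: int
    den: int

    def add(self, other):
        # Same idea: announce to `other` that the left operand is a Ratio.
        return other.add_ratio(self)

    def add_integer(self, left):
        return Ratio(left.value * self.den + self.num, self.den)

    def add_ratio(self, left):
        # Results are not reduced to lowest terms, to keep the sketch short.
        return Ratio(left.num * self.den + self.num * left.den,
                     left.den * self.den)


print(Integer(2).add(Ratio(1, 3)))
print(Ratio(1, 2).add(Ratio(1, 3)))
```

Adding a new numeric class then means adding one `add_*` method to each existing class, instead of editing a growing chain of type tests.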

Pexeso (Voice-Controlled Memory Game)

A memory matching game for children with voice control capability. Built with GTK on Linux, featuring custom speech recognition using HTK. Includes Finding Nemo and Flags card sets. Presented at a school for gifted children in Bratislava.

Wget Presentation

A presentation (in Slovak) about GNU Wget - the powerful non-interactive command-line tool for downloading files via HTTP, HTTPS, and FTP. Covers resume, mirroring, recursive downloads, proxy support, and configuration tips.

ŠKAS - Student Council Work

Work as a member of the Student Chamber of the Academic Senate (ŠKAS) at FMFI UK. Successfully advocated for changes to study regulations: flexible credit requirements for final year students, guaranteed 5-week exam period, removal of prerequisites as hard blocks, and student-friendly policies.

University Coursework Archive

2000 – 2005

Collection of course assignments and projects from Comenius University (FMFI UK). Includes work on CORDIC algorithms, game theory (prisoner's dilemma), Hilbert's program, computational linguistics, neurocomputing simulations, neural networks, and more.

Concross - Consonant Crosswords

A Delphi application for creating and solving consonant crossword puzzles (spoluhláskové krížovky). Features puzzle creation mode, solving interface, and educational value for language learning.

HTML Editors Review

A review of HTML editors and web development tools from the late 90s, including Macromedia Dreamweaver 3, CoffeeCup, HotDog, and others. Compares WYSIWYG vs. code-based approaches to web design.

How to Tie Ties

A visual step-by-step guide to tying three classic tie knots: Manhattan (Four-in-Hand), Windsor, and Butterfly (Bow Tie). Created as one of my first web projects.

Miscellaneous

A collection of miscellaneous personal content: travels around Europe and the USA, sports at the Pitt CS department (volleyball & basketball), movie tracker, mystery postcards, legacy images, old portrait photos, and project logos from the early web days.

Music Collection

Archive of my music collection from the early 2000s, featuring R.E.M. albums (from Murmur to Reveal), Jaromír Nohavica, Édith Piaf, and various singles including Queen, Offspring, and Pink Martini.

SOČ: Internet

High school research project (SOČ - Stredoškolská odborná činnosť) about the Internet, written in Slovak. Covers Internet basics, protocols (TCP/IP, DNS), network services (email, Telnet, FTP, WWW), and the social impact of the Internet in the late 1990s.

Splash!

A nostalgic 90s-style internet splash page featuring original artwork. A snapshot of early web aesthetics and personal expression from the dial-up era.

Slovak Math & Physics Competitions Archive

Archive of Slovak mathematical and physics competition materials from high school years. Contains problem sets, solutions, and seminar materials from 1999-2002.

SKMS Website

Website for Stredoslovenský korešpondenčný matematický seminár (Central Slovak Correspondence Math Seminar). Features interactive star rating system and seminar archives.

Oktava2000 Discussion Forum

PHP-based discussion forum and community platform created during high school years. Features debate system, user management, and custom styling. The archive preserves messages from 2003 and a group collage.